Raspberry Pi AI HAT+ 2: A Gamble on AI Workloads or a Misfire?

At $130, Raspberry Pi's AI HAT+ 2 promises to free up your Pi's CPU for AI projects, but flawed code output and unstable software make it feel like paying for a headache.

Offloading inference from the Raspberry Pi's CPU makes the AI HAT+ 2 an interesting prospect, but its flawed results make it a gamble. In testing, the HAT answered a question in 13.58 seconds, compared with 22.93 seconds on the Pi 5's CPU, though both produced incorrect answers.
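Expressed as a ratio, the two timings from that single question work out as follows. This is plain arithmetic on the reported figures and says nothing about answer quality:

$$
S = \frac{t_{\text{CPU}}}{t_{\text{HAT}}} = \frac{22.93\ \text{s}}{13.58\ \text{s}} \approx 1.69,
\qquad
\frac{t_{\text{CPU}} - t_{\text{HAT}}}{t_{\text{CPU}}} = \frac{9.35\ \text{s}}{22.93\ \text{s}} \approx 40.8\%
$$

In other words, the HAT was roughly 1.7 times faster on this prompt, cutting response time by about two-fifths.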

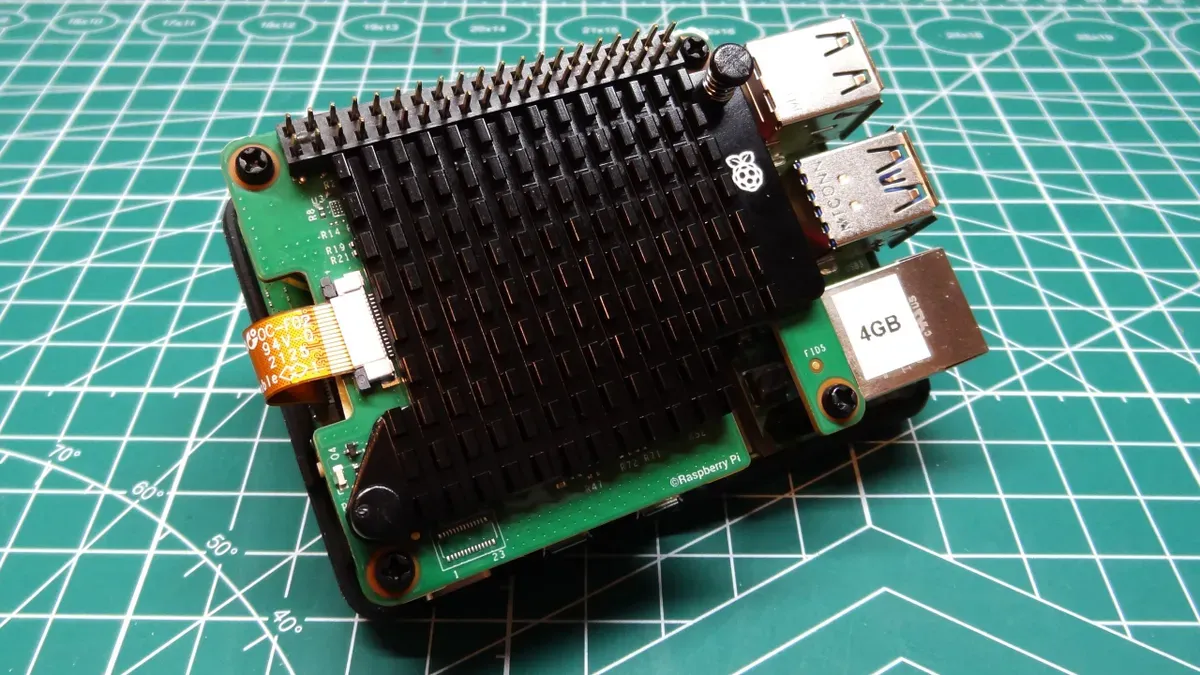

It supports Qwen, DeepSeek, and Llama 3.2 models via hailo-ollama. The hardware pairs 8GB of LPDDR4X RAM with the Hailo-10H chip (40 TOPS INT4 / 26 TOPS), an upgrade from the Hailo-8L and Hailo-8 used on the previous AI HAT+ (13 and 26 TOPS respectively).
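For readers who want to reproduce a timing like the one above, here is a minimal sketch that sends one prompt and measures wall-clock time. It assumes hailo-ollama accepts Ollama's non-streaming `/api/generate` request format; the base URL and model tag are hypothetical placeholders, not values confirmed by this review, so check your own installation before relying on them.

```python
"""Hedged sketch: time one prompt against an Ollama-style /api/generate endpoint.

ASSUMPTIONS (not confirmed by the review): hailo-ollama accepts Ollama's
/api/generate request format, and it listens at BASE_URL below.
"""
import json
import time
import urllib.request

BASE_URL = "http://localhost:8000"  # hypothetical address; check your hailo-ollama setup
MODEL = "qwen2:1.5b"                 # hypothetical model tag


def build_generate_payload(model: str, prompt: str) -> bytes:
    """Encode a non-streaming Ollama-style generate request as JSON bytes."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def parse_generate_reply(raw: bytes) -> str:
    """Extract the generated text from an Ollama-style JSON reply ('' if absent)."""
    return json.loads(raw.decode("utf-8")).get("response", "")


def timed_generate(prompt: str, model: str = MODEL, base_url: str = BASE_URL) -> tuple[str, float]:
    """Send one prompt and return (answer text, elapsed wall-clock seconds)."""
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=build_generate_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    start = time.perf_counter()
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = resp.read()
    elapsed = time.perf_counter() - start
    return parse_generate_reply(body), elapsed
```

Calling `timed_generate("Write a Python function that reverses a string.")` returns the answer and the elapsed seconds. Running it several times and comparing the spread is more informative than a single measurement, and the returned text still needs checking by hand given the accuracy problems described here.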

Software problems persist, however, including 'HailoRT not ready!' errors and flawed Python code in the models' output. The target audience remains GPIO-based projects that need local LLM integration, not vision-based AI work. For simpler tasks, the older AI HAT+ versions are cheaper alternatives.
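If the 'HailoRT not ready!' error turns out to be transient, a caller could wrap requests in a bounded retry. The sketch below assumes the error surfaces as an exception whose message contains that text; the review does not say how it is reported, and the function name `retry_if_not_ready` is hypothetical. A retry cannot fix a driver that never becomes ready, so this only papers over start-up timing issues.

```python
"""Hedged sketch: retry a call that fails with a 'HailoRT not ready!' error.

ASSUMPTION: the error is raised as an exception whose message contains
'HailoRT not ready'. Retrying only helps if the condition is transient.
"""
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_if_not_ready(
    call: Callable[[], T],
    attempts: int = 3,
    delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying with doubling back-off only on 'HailoRT not ready' errors."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts + 1):
        try:
            return call()
        except Exception as exc:
            # Re-raise unrelated errors immediately, and the readiness error on the last try.
            if "HailoRT not ready" not in str(exc) or attempt == attempts:
                raise
            sleep(delay_s)
            delay_s *= 2  # wait longer before each subsequent attempt
    raise AssertionError("unreachable: loop always returns or raises")
```

The `sleep` parameter is injectable so the back-off can be exercised without real delays; in normal use it defaults to `time.sleep`.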

The models were also trained on data that is now out of date. This limitation, combined with unresolved driver problems, raises questions about the HAT+ 2's practical value for developers seeking reliable AI acceleration.