OpenAI ChatGPT Health: Privacy-Sandboxed Health Chats, But Can AI Navigate the Medical Minefield?

OpenAI’s new ChatGPT Health promises to keep your health conversations private—but should you trust an AI with your medical questions when it can’t even tell the difference between a hallucination and a fact?

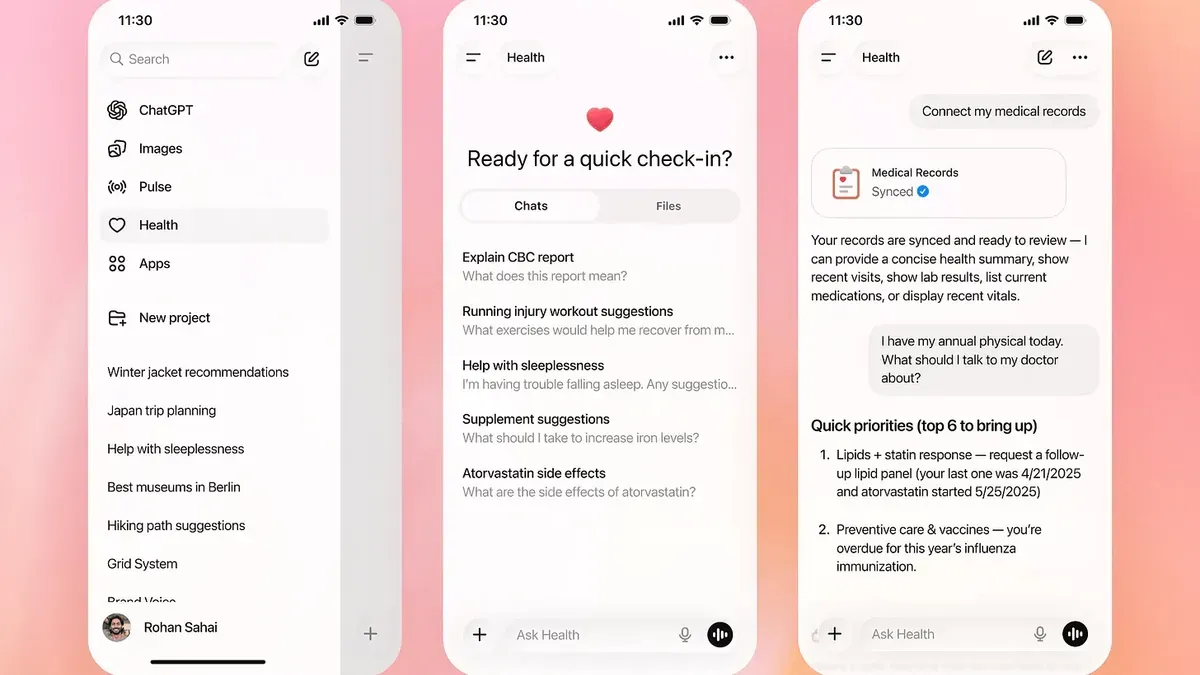

OpenAI announced ChatGPT Health to isolate health discussions from regular chats, citing 230 million weekly health queries. The service integrates with health apps such as Apple Health, Function, and MyFitnessPal, and OpenAI explicitly states that it will not use this data for model training. The approach aims to address privacy concerns while still using health data for user convenience.

"This is a response to existing issues in the healthcare space, like cost and access barriers, overbooked doctors, and a lack of continuity in care," said Fidji Simo, CEO of Applications, OpenAI.

Her stated goals align with broader industry efforts to democratize healthcare access, though large language models (LLMs) remain notoriously unreliable when it comes to medical accuracy.

OpenAI’s terms of service clarify that ChatGPT Health is "not intended for use in the diagnosis or treatment of any health condition." This disclaimer underscores the inherent limitations of AI-generated medical advice, which can produce plausible but incorrect recommendations.

While the privacy-first design isolates health data from general AI training pipelines, the tool’s utility hinges on users recognizing its advisory role rather than treating it as a substitute for professional care.