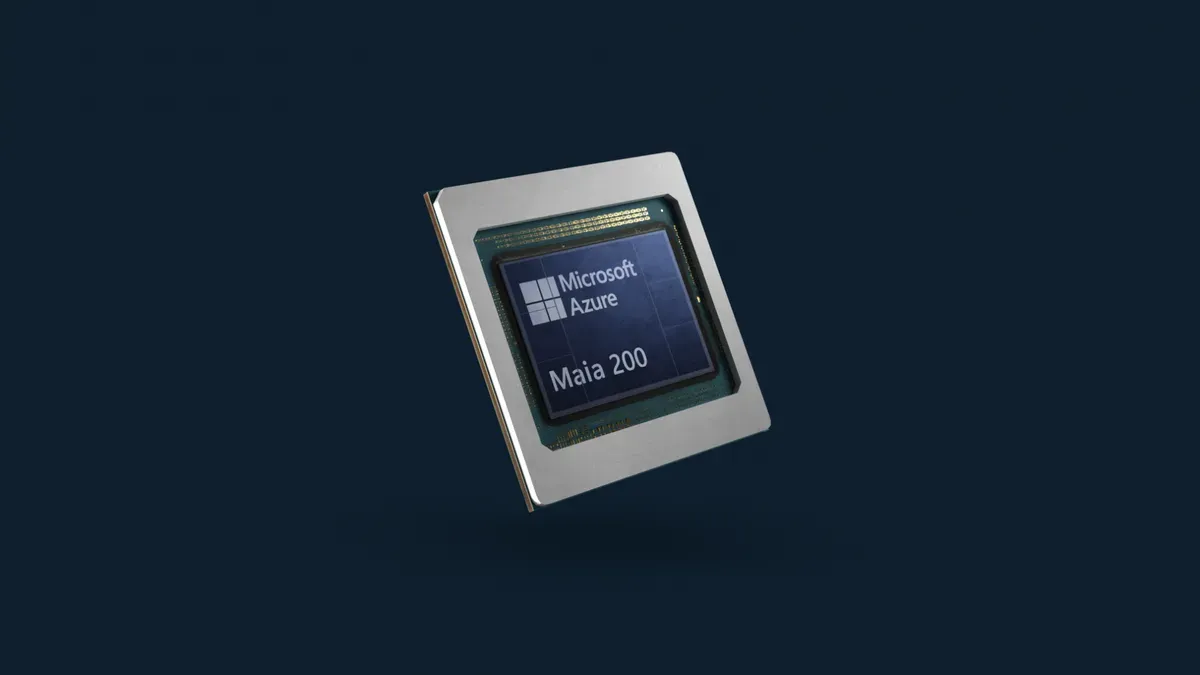

Microsoft’s Maia 200 AI Chip: Faster Than Amazon, Half as Greedy as Nvidia’s Blackwell

Microsoft says its new Maia 200 AI chip outpaces Amazon's and Google's silicon in efficiency, but can it convince the world that AI doesn’t have to burn through power like a crypto rig?

Built on TSMC’s 3nm process with 140 billion transistors, the Maia 200 delivers 10 petaflops of FP4 compute and 216GB of HBM3e memory.

It offers 30% better performance per dollar than its predecessor, the Maia 100, while operating at a 750W TDP, roughly half (about 54%) of the 1400W TDP of Nvidia’s B300 Ultra. That is still a 50% increase over the Maia 100’s operational 500W.

The chip delivers three times the FP4 performance of Amazon’s Trainium3 but trails Nvidia’s Blackwell B300 in raw compute (15 petaflops FP4 versus the Maia 200’s 10), although the B300 draws nearly twice the power.
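
Dividing the quoted peak FP4 figures by the quoted TDPs gives a rough sense of compute per watt. The sketch below does that arithmetic using only the numbers in this article. The dictionary and function names are illustrative. TDP is a design ceiling rather than measured draw, and peak FP4 is not sustained throughput, so this is a back-of-the-envelope comparison, not a benchmark.

```python
# Figures as quoted in the article: peak FP4 petaflops and TDP in watts.
CHIPS = {
    "Maia 200": {"fp4_pflops": 10.0, "tdp_w": 750.0},
    "Nvidia B300": {"fp4_pflops": 15.0, "tdp_w": 1400.0},
}

# Operational power draw quoted for the previous generation.
MAIA_100_TDP_W = 500.0


def pflops_per_kw(fp4_pflops: float, tdp_w: float) -> float:
    """Peak FP4 petaflops per kilowatt of TDP."""
    return fp4_pflops / (tdp_w / 1000.0)


def compare() -> dict[str, float]:
    """Return peak FP4 petaflops per kW for each chip in CHIPS."""
    return {name: pflops_per_kw(**spec) for name, spec in CHIPS.items()}


if __name__ == "__main__":
    for name, value in compare().items():
        print(f"{name}: {value:.2f} PFLOPS/kW")
    # Maia 200: 13.33 PFLOPS/kW
    # Nvidia B300: 10.71 PFLOPS/kW

    maia, b300 = CHIPS["Maia 200"], CHIPS["Nvidia B300"]
    # Maia 200 TDP as a fraction of the B300's: about 0.54, not exactly half.
    print(f"TDP vs B300: {maia['tdp_w'] / b300['tdp_w']:.2f}")
    # Maia 200 TDP relative to the Maia 100: 1.50, i.e. a 50% increase.
    print(f"TDP vs Maia 100: {maia['tdp_w'] / MAIA_100_TDP_W:.2f}")
```

On these quoted numbers, the Maia 200 comes out roughly 24% ahead of the B300 in peak FP4 per kilowatt, even though the B300 leads in absolute compute.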

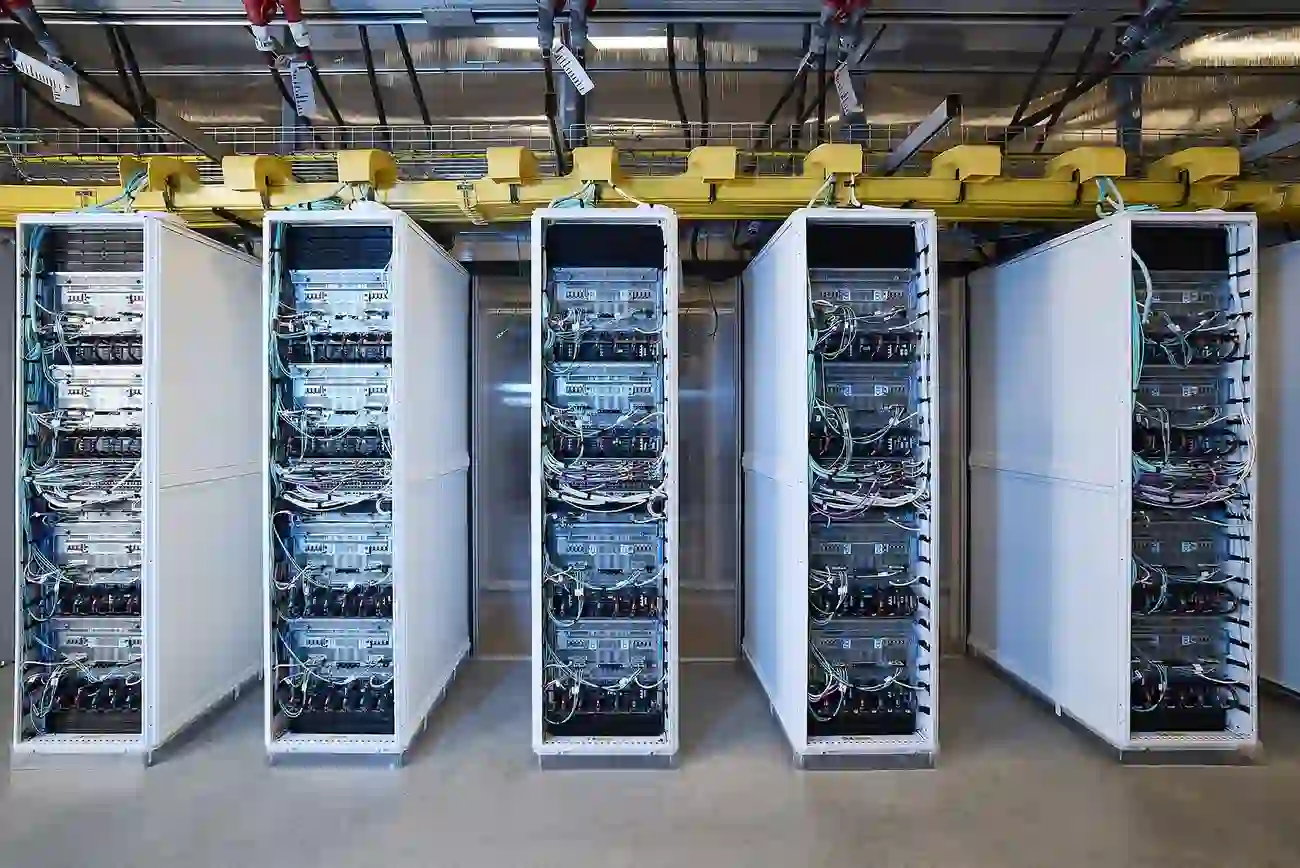

Microsoft has deployed the Maia 200 in its US Central Azure data center, with plans to expand to US West 3 in Phoenix, AZ. Designed for AI inference workloads, the chip prioritizes FP4/FP8 efficiency over complex operations, using a multi-tier SRAM architecture for workload distribution.

Microsoft has emphasized environmental efficiency amid growing public scrutiny of AI’s energy consumption. The Maia 200’s 750W TDP represents a strategic pivot to address concerns about the carbon footprint of AI infrastructure, though it remains higher than the Maia 100’s operational power draw.